
Scaling Intelligence in an AI-dominated Future

From adweek.com

“It seems probable that once the machine thinking method had started, it would not take long to outstrip our feeble powers. . . . They would be able to converse with each other to sharpen their wits. At some stage, therefore, we should have to expect the machines to take control.” – Alan Turing

How seriously should we take artificial intelligence (AI) as a threat to humankind? The images that come to mind when we think of AI tend to come from movies or media in which robots gain consciousness, turn evil, and exact revenge on their human creators. It is hard to picture what robots actually are today when, for most of us, they exist only in science fiction.

Artificial intelligence is the simulation of human intelligence, mostly by computer systems, to perform tasks that normally require human capabilities, such as speech recognition, decision-making, and language translation. AI is already among us: it filters our email, powers Internet search engines, and screens our credit card purchases for fraud. If AI systems stopped working tomorrow, many societies would fall apart.

Computing power already supplements our natural lives and provides shortcuts through them. With voice memos in place of face-to-face communication, map applications instead of manual navigation, and intimate relationships that begin on online dating services, many of us can no longer entirely separate ourselves from technology. We are inextricably linked to it, and the line between human and machine is blurring.

My interest in artificial intelligence began in 2015. I had borrowed a book by the inventor Ray Kurzweil, whom Bill Gates described as “the best person I know at predicting the future of artificial intelligence.” In How to Create a Mind: The Secret of Human Thought Revealed (2012), Kurzweil argues that technology’s biggest goal is to artificially replicate the most complex entity on earth: the human brain.

I became hooked on the concept of a thinking computer. To me, this is the most advanced and revolutionary concern in our world today. An intelligent machine addresses the most fundamental scientific and spiritual questions: What is consciousness? Can it be replicated synthetically? And what will happen if it is?

British computer scientist and mathematician Alan Turing was the first to propose the idea of a thinking machine, back in 1950. The AI already deeply present in our everyday lives is only a primitive version of the machine brain he had in mind. Turing argued that there is a difference between, say, a machine learning how to play a game and actually gaining the ability to understand it. He proposed what is now known as the Turing test to evaluate whether a machine can think at a level equivalent to human intelligence. A human judge holds a text-based conversation with both another human and a machine. If the judge cannot tell which is the computer and which is the human, the machine is considered to have reached human-level intelligence.

From capterra.com

Reaching artificial general intelligence

The AI community calls human-level machine intelligence artificial general intelligence (AGI). Reaching it requires enormous computing power and data storage, along with the integration of several complex learning systems. For a machine to genuinely learn and assimilate knowledge, it needs expertise across many domains, not the single narrow area of expertise we see in today’s AI. To date, no machine has conclusively passed the Turing test or reached AGI.

The point of developing artificial intelligence is to defy limits. Leaders in the field believe it is possible for AI to reach human-level intelligence, but they are divided on whether this is something we should pursue. For one thing, the idea of creating an artificial human brain seems far-fetched; there is still much we do not know about our brains and how they function. Mathematicians and computing specialists, however, believe that although the brain is incredibly complex, it can be understood as an information-processing system. AI is designed to mimic human thought processes, but not necessarily to possess them.

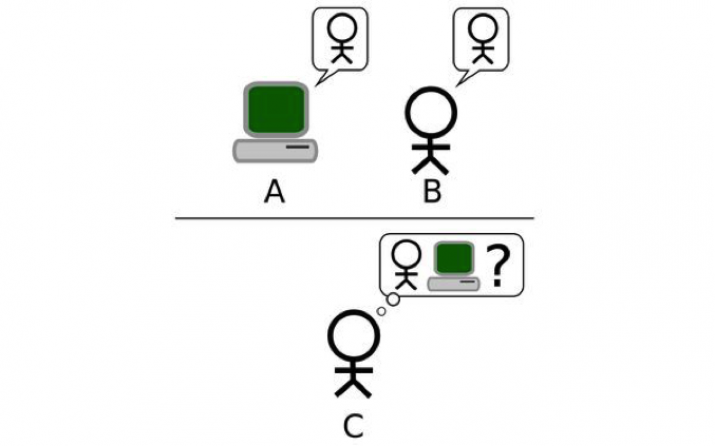

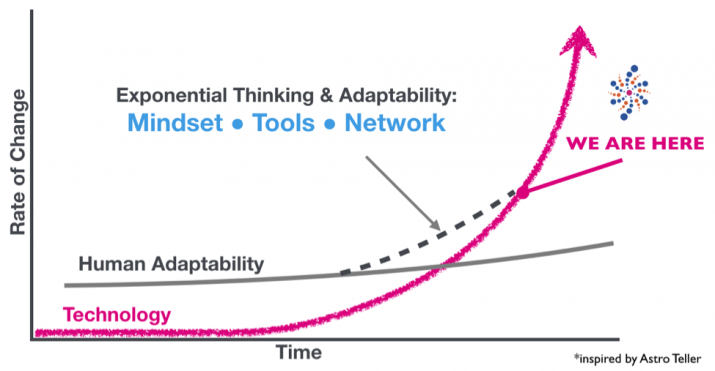

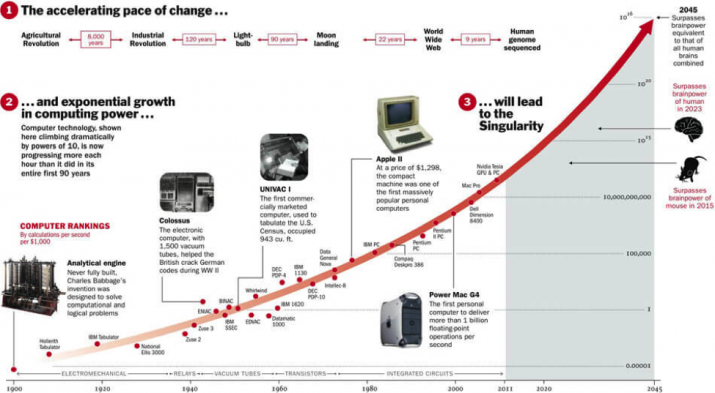

Some argue that AI offers only unfulfilled promises, and that the step from what we have now to general intelligence is harder than AI leaders claim. However, technological development follows an exponential pattern of growth, in which small early steps give way to ever-larger leaps.

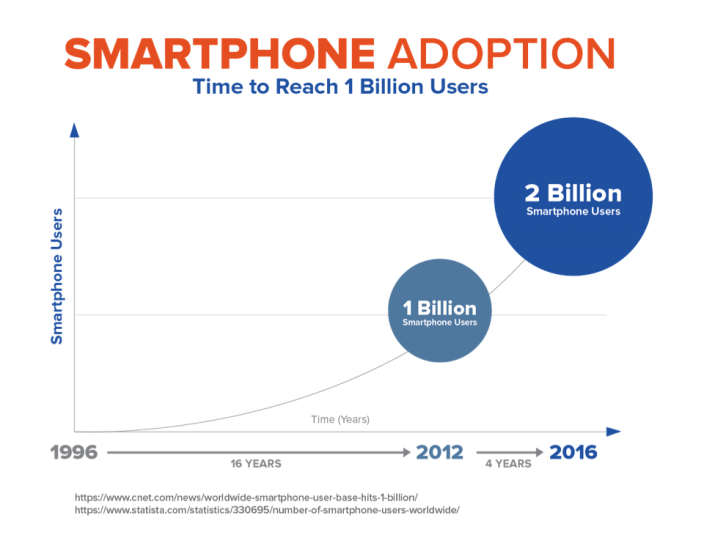

From su.org

The smartphone revolution is a good example of this exponential growth. The first iPhone was released in 2007. Only a decade later, iPhones and mobile technology as a whole have become an essential part of society. A substantial number of people rely on this personal computing device, dedicating hours to it every day. Often, we spend our first and last waking moments staring into our phone screens.

The iPhone that Steve Jobs launched 12 years ago looks archaic compared with the increasingly powerful smartphones sold today. As the graphic below explains, computing power has grown by successive powers of 10 since the early 1900s. Today, it is progressing more each hour than it did in the entire first 90 years of its existence. With each year that passes, therefore, the likelihood of AGI, or “strong AI,” increases dramatically.

What this demonstrates is that exponential growth is rapid, astonishing, and unpredictable. Raw computing power is now cheaper and more accessible than ever before.

From cnet.com

Evolution versus exponential: learning from flesh and blood

What is hardest for a machine to learn comes easiest to humans. And what is hard for us appears to be a breeze for them. It is almost criminally easy for a machine to beat a world champion at chess, or an e-sports (video game) team at a strategic game. Yet it is extremely difficult for a machine to recognize sarcasm, navigate a crowded street, or learn from experience.

The natural ability of the human brain to make small distinctions (coordination, decision-making, long-term planning) is a result of millions of years of evolution, something that is nearly impossible to instill in a machine artificially. But thanks to exponential growth, computers are now powerful enough to take advantage of learning techniques that were previously unsuccessful.

While humans have the advantage of those millions of years of evolution, evolution proceeds at an extremely slow pace. Machines, by contrast, could improve upon their own design far more rapidly and would have no reason to stop self-improving. At that point, smart computers, not humans, will be the ones creating smarter computers, and safeguards will be difficult to implement.

AI already presents real dangers, from job losses to the possibility of an AI arms race between countries. But if AI is able to reach human-level intelligence through machine learning, or through being programmed to continuously self-improve, its rise will be either the best thing ever to happen to us or the worst. It might even be the last.

The development of AI regulation and ethics, whether in national jurisdictions or at the transnational level, such as at the United Nations, is moving more slowly than AI development itself. Experts lack consensus on two questions: when AI will reach AGI, and whether it will be dangerous. These vital questions must be answered before we continue to move AI blindly forward.

From medium.com

Do we know what we are doing?

We must not be too quick to assume that we thoroughly understand AI. If we do not actively protect ourselves from the potential negative outcomes of new learning systems, we could be outpaced by “the machines” and left with no control. If AI spirals out of control, it will most likely not be because of a scientist with malicious intent, but because of a scientist falling prey to unintended consequences. Only half of AI developers are confident that aligning a computer’s goals with our own will be easy, while the rest recognize that intelligence and subservience do not necessarily go together. As Norbert Wiener, the mathematician who founded cybernetics and helped lay the groundwork for AI, said: “The future will be an ever-more-demanding struggle against the limitations of our intelligence, not a comfortable hammock in which we can lie down to be waited upon by our robot slaves.” (Wiener 1964, 69)

What concerns and divides leaders in the industry is the moment when machines pass AGI and reach ASI, or artificial superintelligence. Imagine an intellect vastly smarter than the best human brains in every field, from scientific creativity to general wisdom and social skills. A computer a trillion times more intelligent than a human is beyond our conception. We have only ever known ourselves as the dominant species.

That said, we still have to reach AGI before superintelligence becomes a possibility. Furthermore, the whole reason AI is being developed is that it could transform human life on an unprecedented scale. It could help mitigate climate change, assist in dangerous emergency situations, and advance our understanding of illness and aging beyond what the human intellect can achieve on its own.

More realistically, we are not going to see robots evolve as an independent class, but rather as a hybrid of human and machine. The ultra-wealthy will be the first to integrate artificial parts into their bodies, giving themselves superhuman abilities worthy of our science fiction novels and films.

From becominghuman.ai

Current progress

Despite the potential dangers ahead, progress is being made and concerns are being heeded. Google has formed the world’s most powerful team of AI experts and top robotics and machine intelligence companies. To join, members must agree to form an ethics board and to ensure that their work cannot be used for espionage.

DeepMind is arguably the most successful AI company in the world and is part of Google’s team. Its co-founder and CEO, Demis Hassabis, wants to build artificial scientists to tackle climate change, disease, and poverty, problems involving systems too complex for human scientists to solve. “If we can crack what intelligence is, then we can use it to help us solve all these other problems,” Hassabis told The New Yorker magazine.

Leaders in machine learning and AI repeatedly speak of the striking beauty in the complexity of the human brain and, with it, the conscious mind. While these researchers, mathematicians, computer experts, and programmers try to understand the mind from the perspective of science and technology, Buddhists have explored the same question for the past 2,500 years. The core question that both Buddhism and AI address is: “What is the mind?”

References

Ray Kurzweil. 2012. How to Create a Mind: The Secret of Human Thought Revealed. New York: Viking Penguin.

Norbert Wiener. 1964. God & Golem, Inc.: A Comment on Certain Points Where Cybernetics Impinges on Religion. Cambridge, Massachusetts: MIT Press.

See more

The Doomsday Invention: Will artificial intelligence bring us utopia or destruction? (The New Yorker)

Buddhistdoor Global Special Issue 2019